How to enable overlay networking for Docker containers?

The idea of Docker containers running on multiple daemons and communicating with each other is very compelling. I spent a long time thinking about how to enable overlay networking and container orchestration across multiple Docker daemons, since not every project needs the Kubernetes machinery or justifies its cost and complexity.

I have seen a lot of projects in this category that are deployed and orchestrated with glue scripts, pipelines, and other ad hoc tooling. In the long run, maintaining these projects becomes a hurdle.

Because the process is not standardized, and company engineers come and go, every person brings their own way of doing things. As a result, these projects become stressful and burdensome to maintain, improve, or work on at all.

That is the reason why simplecontainer was born. I saw an opportunity to fill the gap where you want to deploy on cheap virtual machines but still orchestrate everything like a pro.

If you are not familiar with simplecontainer, you should check it out.

The challenge to enable container overlay networking for simplecontainer was something I had been researching for some time.

I tested a few options and, in the end, was deciding between two ways to enable overlay networking:

- Slackhq Nebula

- Flannel

Other players like Cilium and Calico dropped support for plain Docker containers in favor of Kubernetes-only networking implementations. That is a natural way for things to evolve, since Kubernetes provides an API and a key-value store out of the box, which makes keeping state and bootstrapping quickly much less complex to develop and maintain.

Slackhq Nebula

Slackhq Nebula got my attention since it provides secure peer-to-peer networking, creating a single overlay network on top of insecure networks like the Internet.

It started in Slack as a side project and became its own product.

It is based on UDP hole punching and relies on a lighthouse server to act as a traffic officer between peers, helping them discover each other. It has its own PKI for authentication between peers.

All in all, it is a very good solution for secure overlay networking over insecure networks using PKI.

I abandoned Nebula because it does not integrate directly with Docker networking, which is what would allow a Docker network to be extended across machines on the same or another network.

It had a different use case in mind when it was created.

Flannel

Flannel is a network fabric made for exactly this purpose: enabling overlay networking for containers. k3s uses it as its default solution for overlay networking between nodes.

Flannel uses etcd as the key-value store to handle state. To enable overlay networking, etcd also needs to be distributed, so that every node running Flannel has a picture of the whole network and can make decisions that will not conflict with other nodes.
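To make "a picture of the whole network" more concrete, here is a minimal sketch of the underlying idea: one cluster-wide address range is split into per-node subnets that never overlap. This is a simplified model, not Flannel's actual allocation code; the address range, the node names, and the `allocate_subnets` helper are assumptions made up for illustration.

```python
import ipaddress


def allocate_subnets(network, nodes, subnet_len=24):
    """Give each node its own non-overlapping subnet carved from `network`.

    Simplified model: subnets are handed out in order. A real allocator
    also has to handle lease renewal and nodes that leave.
    """
    pool = ipaddress.ip_network(network).subnets(new_prefix=subnet_len)
    leases = {}
    for node in nodes:
        try:
            leases[node] = next(pool)
        except StopIteration:
            raise RuntimeError(f"no free subnets left in {network}") from None
    return leases


# Hypothetical cluster range and node names.
leases = allocate_subnets("10.10.0.0/16", ["simplecontainer-1", "simplecontainer-2"])
for node, subnet in leases.items():
    print(node, subnet)
```

Because every node can see all leases in the shared store, no two nodes end up handing out the same container addresses.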

When started and configured correctly, Flannel does a great job of overlay networking and supports different backends:

- vxlan

- wireguard (encrypted overlay network but requires wireguard installed on the system)

- host-gw

- udp

- other experimental backends (read more here: https://github.com/flannel-io/flannel/blob/master/Documentation/backends.md)
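The backend is selected in Flannel's network configuration, a small JSON document that Flannel reads from etcd (by default under the key `/coreos.com/network/config`). The sketch below only builds that document. The `flannel_config` helper and the `10.10.0.0/16` range are illustrative assumptions, and the backend set is limited to the ones named in the list above.

```python
import json

# Backends named in this article; Flannel's documentation lists more.
BACKENDS = {"vxlan", "wireguard", "host-gw", "udp"}


def flannel_config(network, backend="vxlan"):
    """Return a Flannel network config as a JSON string."""
    if backend not in BACKENDS:
        raise ValueError(f"unknown backend: {backend}")
    return json.dumps({"Network": network, "Backend": {"Type": backend}})


# Hypothetical address range for the whole overlay network.
config = flannel_config("10.10.0.0/16", "vxlan")
print(config)
```

Switching to an encrypted overlay would then be a matter of passing `"wireguard"` instead of `"vxlan"`, provided WireGuard is installed on the hosts.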

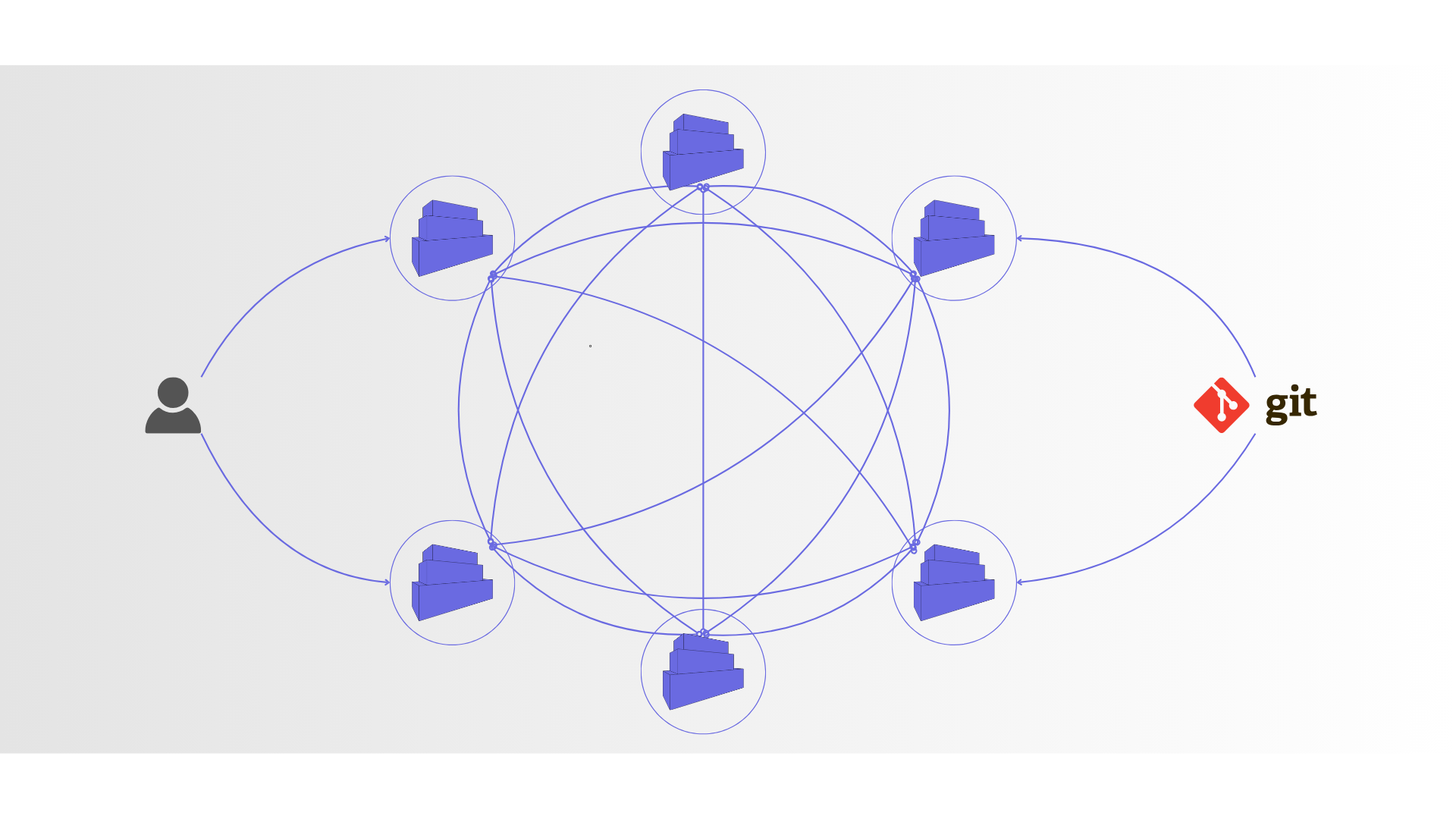

How I integrated Flannel into simplecontainer

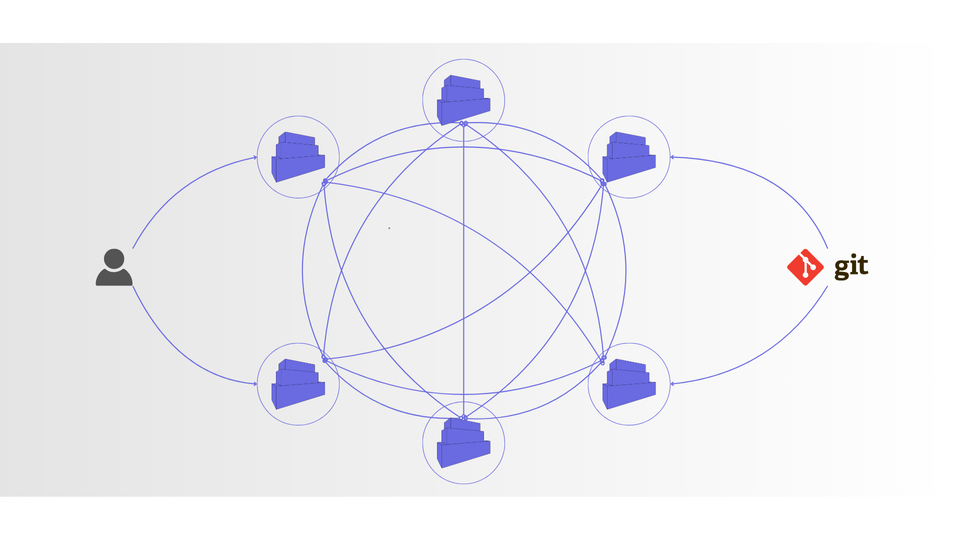

Since simplecontainer itself uses Raft and an etcd store to keep and replicate data across nodes, running a single-node etcd on each node where simplecontainer is started was an obvious choice, because Flannel is already integrated to work with etcd.

Data is replicated via Raft and written back into the single etcd instance on every node running simplecontainer.

Starting simplecontainer looks like this:

smrmgr start -a smr-agent-1 -d simplecontainer-1.qdnqn.com -n simplecontainer-1.qdnqn.com

When it starts, the following happens:

- Single-node etcd starts

- Raft starts

- Flannel starts and shares its decisions with the other nodes via Raft

- A Docker network named cluster is created if it does not already exist
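The last step can be pictured roughly as follows. Flannel writes the subnet it leased for the local node to a file (by default `/run/flannel/subnet.env`), and a Docker bridge network can be created on top of that subnet. How simplecontainer does this internally is not shown here, so treat the sketch as an assumption: the sample file contents and both helper functions are hypothetical, and the code only builds the `docker network create` arguments instead of running them.

```python
import ipaddress
import shlex


def parse_subnet_env(text):
    """Parse KEY=VALUE lines as found in Flannel's subnet.env file."""
    env = {}
    for line in text.splitlines():
        line = line.strip()
        if line and not line.startswith("#") and "=" in line:
            key, value = line.split("=", 1)
            env[key] = value
    return env


def docker_network_create_args(env, name="cluster"):
    """Build (but do not run) a `docker network create` command line."""
    iface = ipaddress.ip_interface(env["FLANNEL_SUBNET"])
    return [
        "docker", "network", "create",
        "--driver", "bridge",
        "--subnet", str(iface.network),   # the node's leased subnet
        "--gateway", str(iface.ip),       # first address, used by the bridge
        "--opt", f"com.docker.network.driver.mtu={env['FLANNEL_MTU']}",
        name,
    ]


# Made-up sample of what subnet.env may contain on one node.
SAMPLE = """\
FLANNEL_NETWORK=10.10.0.0/16
FLANNEL_SUBNET=10.10.1.1/24
FLANNEL_MTU=1450
FLANNEL_IPMASQ=false
"""

args = docker_network_create_args(parse_subnet_env(SAMPLE))
print(shlex.join(args))
```

Matching the bridge MTU to the value Flannel reports matters because the overlay encapsulation adds headers to every packet.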

Now, when a node wants to join the cluster, for example:

smrmgr start -a smr-agent-2 -d simplecontainer-2.qdnqn.com -n simplecontainer-2.qdnqn.com -j simplecontainer-1.qdnqn.com:1443

Everything starts the same way, and the new node informs an existing member of the cluster that it wants to join. Every member then gets information about the new member.

Now containers on these nodes can talk to each other without any issues, using the integrated DNS from simplecontainer itself.

For the purpose of demonstration, say two containers are running and connected to the Docker cluster network:

- Running on Node 1: Group: busybox, Container: busybox-busybox-1

- Running on Node 2: Group: busybox, Container: busybox-busybox-2

They can say hello to each other on these domains even if they are miles apart.

ping cluster.busybox.busybox-busybox-1.private

ping cluster.busybox.busybox-busybox-2.private

Everything is made possible with Flannel. Thank you to the Flannel team for this cool solution and for still keeping Docker support.
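The domain names in the ping commands above appear to follow the pattern network, group, container, then a `.private` suffix. The helper below is only a sketch of that inferred pattern, based on the two names shown in this post; `container_fqdn` is a hypothetical function, not part of simplecontainer.

```python
def container_fqdn(network, group, container, tld="private"):
    """Build a container's DNS name from the pattern inferred above."""
    return ".".join([network, group, container, tld])


for name in ("busybox-busybox-1", "busybox-busybox-2"):
    print(container_fqdn("cluster", "busybox", name))
```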

Anyhow, simplecontainer supports:

- Multiple Docker daemons in a cluster, running on different virtual machines

- GitOps deployments

With this in mind, you can easily use a GitOps approach to deploy containers on different machines using one tool.
